To handle duplicate content without user-selected canonicals in Google Search, employ methods like setting proper 301 redirects, using the "noindex" tag for duplicate pages, & optimizing your sitemap. Implement structured data to clarify content relevance & use consistent internal linking to signal the preferred version. Regularly audit your site for duplicate content issues & update your content strategy to ensure original, high-quality material that can naturally reduce duplication. Utilizing Google Search Console can help in identifying & mitigating duplicate content issues efficiently.

Handling Duplicate Content Without User-Selected Canonicals in Google Search. Struggling with duplicate content? Discover simple strategies to manage it effectively, even without user-selected canonicals, & boost your site's search visibility!

Duplicate content refers to identical or highly similar content appearing on multiple URLs. This can dilute page authority in search rankings. Duplicate content can occur for various reasons: different versions of web pages, printer-friendly formats, or identical product descriptions across e-commerce platforms. Google's algorithms aim to show users valuable & unique content, so when several URLs carry the same material, Google has to pick one of them to index & rank. Without user-selected canonical tags, it relies more heavily on other signals to decide which version to prioritize.

In this context, Google weighs several signals, including redirects, whether a URL is listed in the sitemap, HTTPS versus HTTP, & internal links. A clear & concise strategy around duplicate content is crucial for any website owner. This involves understanding how search engines interpret duplicate content & identifying effective methods to alleviate issues arising from it.

Over time, search engines have adapted to the challenges presented by duplicate content. Initially, Google's algorithm faced difficulties in accurately indexing web pages with identical information, & duplication was widely treated as something to be penalized. That approach is detrimental to the many legitimate websites that display duplicated data inadvertently. Google's guidance today is that duplication is not penalized in itself unless it is meant to deceive; instead, its algorithms group duplicate URLs & choose one version to show.

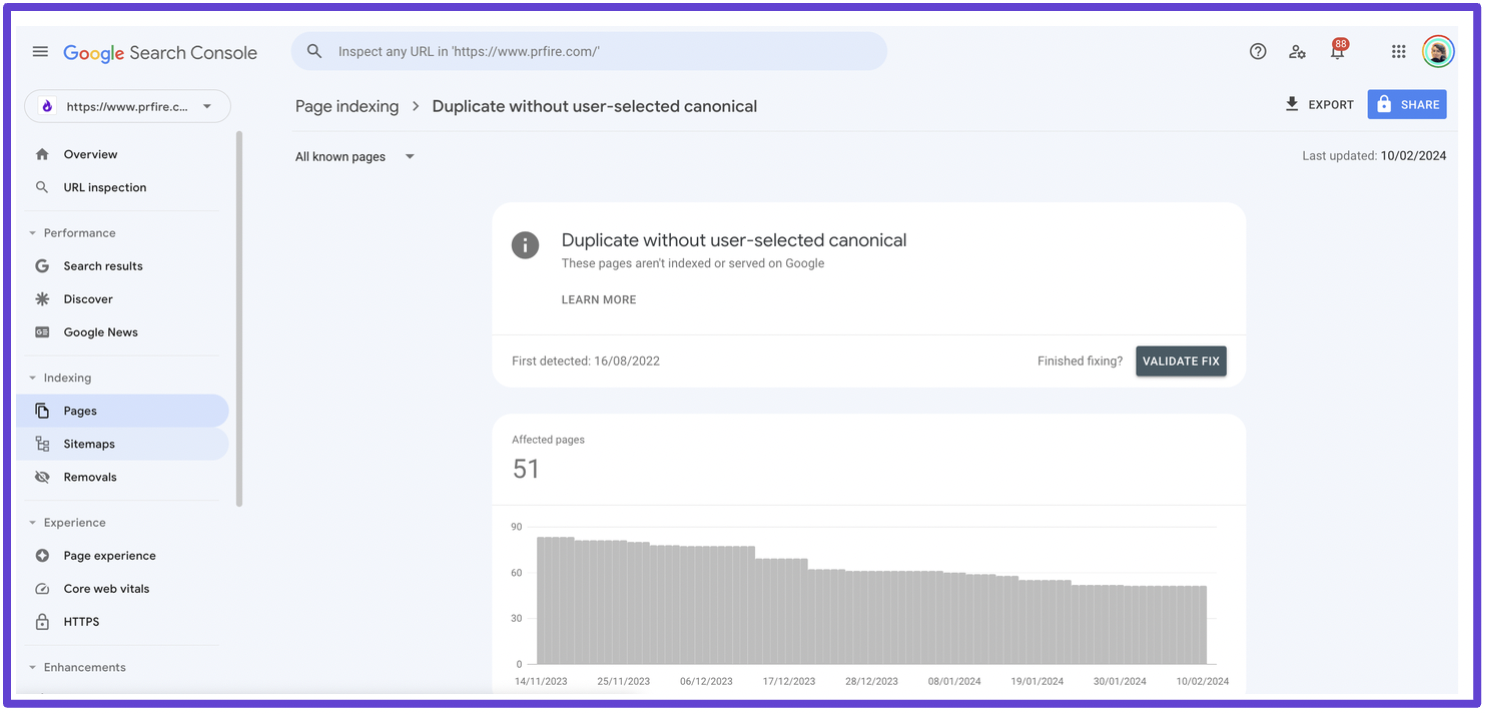

As technology progressed, different strategies emerged to address content duplication more effectively. Google introduced tools such as Search Console & its URL Inspection tool, enabling webmasters to monitor their site's performance. These developments empowered website owners to take actionable steps towards resolving duplicate content even where no user-selected canonical tags are in place. Thus, the focus shifted from punitive measures to proactive management of content.

Implementing effective strategies to manage duplicate content involves several best practices. First, website owners should conduct regular audits of the content they publish. Utilize tools that identify duplicate pages & provide insights into which URLs compete against each other. This ensures that necessary actions can be taken to minimize duplication. Next, optimizing page titles, headers, & meta descriptions can significantly reduce confusion for search engines about which page to prioritize.

Redirecting users from duplicate URLs to the original page can also help. This can be achieved using 301 redirects. Such redirects notify search engines of the preferred version of a page while ensuring users maintain access to relevant content. Alongside these measures, keep internal links consistent so that they always point to the preferred URL. This strengthens the authority of preferred pages & makes it clearer to search engines which content holds more value.

Addressing duplicate content issues effectively benefits both search visibility & user engagement. When search engines rank the right pages higher, users can easily find relevant information without sifting through irrelevant results. This leads to higher click-through rates & lower bounce rates, enhancing user satisfaction. On top of that, when content is optimized correctly, it establishes a more cohesive brand image, reinforcing authority & trustworthiness.

Ultimately, this strategy also positively affects SEO rankings. With Google prioritizing well-structured, original content, websites that actively manage duplicates may experience improvements in visibility & traffic. A clear understanding of how duplicates may impact user experience emphasizes the importance of addressing such challenges systematically.

Several challenges arise when dealing with duplicate content issues. One notable difficulty involves identifying the cause of duplication accurately. Various factors, including content management system settings & syndication practices, can lead to unintentional duplication. Consequently, identifying these root causes demands thorough investigation & technical know-how.

Implementing effective strategies also requires ongoing commitment & monitoring. Keeping track of search indexing changes & adapting accordingly can prove taxing for many site owners. Nevertheless, solutions exist. Digital tools can assist in detecting duplicate content, allowing for timely adjustments. Search Console can alert website managers about identified errors. A dedicated content audit schedule makes potential duplication easier to manage.

Looking forward, several advancements may shape how duplicate content is addressed. With machine learning technologies gaining traction, search engines could become more adept at understanding content context & relevance. This shift may lead to improved handling of duplicates without solely relying on canonical tags. On top of that, improving AI algorithms could facilitate dynamic content assessments, allowing algorithms to implement user-centric adjustments based on individual preferences.

As websites increasingly adopt more complex structures, staying informed on evolving best practices will remain paramount. Consequently, webmasters should proactively educate themselves on emerging strategies for duplicate content management. Engaging with industry experts through webinars or online forums can offer valuable insights into cutting-edge techniques.

Duplicate content can lead to confusion in Google Search. It often makes it hard for search engines to determine which version of a page they should rank. This can dilute the SEO efforts of a site. Recognizing duplicate content is vital to maintain a site's authority. Ensuring that search engines find the best version of a page is key.

When multiple pages offer similar content, it creates issues. Google picks one version as the canonical based on the signals it has, which may not be the version you prefer, & may filter the others out of results. This can result in less visibility for the pages you care about. Addressing duplicate content is essential for effective search ranking.

By resolving duplicates, site owners can enhance user experience. When users find the correct version of content, they can engage better. This can lead to increased traffic & improved rankings. Websites with clear canonical policies benefit from effective indexing by Google.

Knowing how to identify duplicate content is central to SEO practices. Several tools exist to analyze if your site has duplicate content. Tools like Screaming Frog, Sitebulb, or Google's Search Console can help. Checking reports for duplicate title tags, meta descriptions, or headings is crucial.
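
A check for duplicate title tags can be sketched in a few lines of Python. This is only an illustration, assuming you have already crawled your pages into a mapping of URL to title text; the `pages` data & the `find_duplicate_titles` name are hypothetical & not taken from any of the tools named above.

```python
from collections import defaultdict


def find_duplicate_titles(pages: dict[str, str]) -> dict[str, list[str]]:
    """Group URLs by normalized title text and keep groups with more than one URL."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url, title in pages.items():
        # Collapse whitespace and ignore case so trivial differences do not hide duplicates.
        key = " ".join(title.split()).lower()
        groups[key].append(url)
    return {title: sorted(urls) for title, urls in groups.items() if len(urls) > 1}


# Hypothetical crawl output: URL -> title tag text.
pages = {
    "https://example.com/shoes": "Red Shoes | Example Shop",
    "https://example.com/shoes?sort=price": "Red Shoes |  Example Shop",
    "https://example.com/about": "About Us",
}
duplicates = find_duplicate_titles(pages)
for title, urls in duplicates.items():
    print(title, urls)
```

The same grouping works for meta descriptions or headings: swap in the relevant field from your crawl export.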

Google's site: search operator can also help. Entering a query like “site:yourdomain.com” followed by keywords, or by a distinctive sentence in quotes, can surface duplicates. Manual checks are time-consuming, but they remain practical for smaller websites.

Regular audits of content help pinpoint problems early. Set a schedule to review & update your content regularly. This proactive approach saves time & effort in the long run.

Google has specific guidelines for dealing with duplicate content. They prioritize user-focused content over simply ranking tactics. Content duplications may not always be harmful, but they can cause problems.

If Google detects duplicate content, it may not penalize the site but may filter it. Therefore, ensuring uniqueness in content is advisable. Google prefers original content that provides distinct value to users.

According to Google’s guidelines, whenever possible, use canonical tags. Canonical tags signal to search engines which version of a page is the preferred one. They guide Google to the main version of any duplicate pages, improving the indexing process.
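
To see whether a page declares a canonical at all, you can parse its HTML & look for the tag. The sketch below uses only Python's standard library; the class & function names are hypothetical, & it assumes you already have the page's HTML as a string.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> found in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr_map = dict(attrs)
        # rel may hold several space-separated values, or be missing entirely.
        rel_values = (attr_map.get("rel") or "").lower().split()
        href = attr_map.get("href")
        if "canonical" in rel_values and href:
            self.canonicals.append(href)


def find_canonicals(html: str) -> list[str]:
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


sample = '<html><head><link rel="canonical" href="https://example.com/shoes"></head></html>'
print(find_canonicals(sample))
```

An empty result means the page has no user-selected canonical; more than one result means the page sends conflicting hints & is worth fixing.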

“Handling Duplicate Content Without User-Selected Canonicals in Google Search is vital for maintaining site integrity.” – Ms. Onie Haag DDS

Many websites use content management systems (CMS) like WordPress. These platforms often generate duplicate content accidentally. It is helpful to manage settings that affect URL structure & content visibility. Addressing these can reduce duplicate content risks.

For example, WordPress default settings can sometimes create duplicate URLs. Reviewing the permalink settings is essential in this case. Avoid the plain, ID-based structure (“/?p=123”). Instead, favor structured permalinks based on post names.

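Controlling pagination can also help. Google announced in 2019 that it no longer uses rel="next" & rel="prev" as an indexing signal, so these attributes alone will not resolve pagination issues, although other search engines may still read them. Give each paginated page its own URL & link the pages to one another with ordinary links. Always verify that pagination does not create duplicate content.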

One effective method of handling duplicates is using 301 redirects. This tells search engines that a page has permanently moved. Instead of showing multiple versions, visitors are directed to the intended page. This action consolidates link equity & improves user experience.

301 redirects should be correctly set up in the server configurations. This avoids missed opportunities for traffic & indexing. Reassess redirects after any technology changes or updates. Keeping track of redirects can ensure smooth user navigation & search results.
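
One way to keep track of redirects is to hold them in a simple mapping & collapse any chains before deploying them, so that every old URL points straight at its final destination. The sketch below assumes the redirects live in a Python dictionary of source path to target path; the function name & sample paths are hypothetical.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every source URL directly at the end of its redirect chain."""
    flattened = {}
    for source, target in redirects.items():
        seen = {source}
        # Follow the chain until we reach a URL that does not redirect further.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


# Hypothetical redirect map with a two-step chain.
redirect_map = {
    "/old-shoes": "/shoes-2023",
    "/shoes-2023": "/shoes",
}
print(flatten_redirects(redirect_map))
```

Rerunning a check like this after platform or URL changes catches chains & loops before they reach the server configuration.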

Also, checking redirected URLs with the URL Inspection tool in Google Search Console is beneficial. Making sure they route users correctly can prevent unnecessary duplicates. Consistent monitoring can lead to better site performance.

URL parameters can cause duplicate content problems. They often appear when users sort or filter content. An example includes e-commerce sites, where users filter items by color or size. Without proper management, these alternative URLs may appear in search results.

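Google Search Console used to provide a URL Parameters tool for telling Google how to handle these parameters, but Google retired it in 2022 & now works out parameter handling on its own. You can still make that job easier: link internally only to the clean URL, keep parameters in a consistent order, & separate parameters that change content from those used just for tracking. This reduces the chances of duplicate indexing.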

Developing a clear strategy for managing URL parameters can minimize duplicate issues. Doing so builds organized site structure. It ensures that Google focuses on the main content.
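
Part of such a strategy can be expressed as a normalization rule that your templates & internal links apply consistently. The sketch below drops tracking parameters & sorts the rest so that equivalent URLs collapse to one form. The `TRACKING_PARAMS` list is an assumption for illustration; which parameters are safe to drop depends on your site.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumed list of parameters that never change what the page shows.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid", "fbclid"}


def normalize_url(url: str) -> str:
    """Remove tracking parameters, sort the remaining ones, and drop the fragment."""
    parts = urlsplit(url)
    params = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS
    ]
    params.sort()
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(params), ""))


print(normalize_url("https://example.com/shoes?utm_source=news&size=9&color=red"))
print(normalize_url("https://example.com/shoes?color=red&size=9"))
```

Both calls yield the same URL, which is the point: filters given in a different order or tagged with tracking codes no longer look like separate pages in your own links & sitemap.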

Content syndication allows articles to appear on multiple sites. But it may lead to duplicate content concerns. To prevent issues, it is important to follow best practices for syndication.

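When syndicating content, ask partners to add a canonical tag pointing to your original. This marks which version is the preferred one. It tells Google which site should receive the credit for the content. Without this tag, you risk diluting SEO strength. Google treats a cross-domain canonical as a hint rather than a rule, & it has indicated that having partners noindex the syndicated copy is the more reliable option.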

Where possible, provide only a summary or excerpt on syndicating sites, & link back to the original post. This strategy may improve organic traffic while avoiding duplicates.

Doing this maximizes the benefits of syndication without hurting your site's rankings.

Regularly updating content can help combat duplicates. Fresh content shows Google that a site is active. It may improve indexation while pushing old duplicates down results pages. Always review older posts for potential updates or revisions.

When rewriting pages, avoid merely rephrasing text. Aim to expand & provide more value. Adding new perspectives, examples, or data can make content unique.

Updating regularly can prevent the stagnation of your site's content. This practice can positively affect rankings & user engagement.

Monitoring duplicate content should be ongoing. Utilizing analytic tools effectively allows for easy tracking. Schedule audits at consistent intervals. Examples include monthly or quarterly checks.

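Google Search Console can provide data about indexing issues. In the Page indexing report, pay attention to statuses such as “Duplicate without user-selected canonical” & “Duplicate, Google chose different canonical than user”. This active approach aids in maintaining a clean index.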

| Monitoring Tools | Purpose |
|---|---|
| Google Search Console | Alerts on indexing problems |
| Screaming Frog | Find potential duplicates |
| Semrush | SEO analysis & tracking |

Consider incorporating feedback sessions with your team. Ensure everyone understands the importance of managing duplicate content. This can lead to a unified approach in dealing with it.

Creating a clear content strategy is crucial. This should prioritize unique content creation over duplicating existing material. Aim to produce original insights & valuable information.

Documenting guidelines for content creation helps maintain consistency. Include rules for titles, headings, & meta descriptions. This can minimize the chances of duplicates arising during content production.

Investing in original content fosters trust with your audience. Engaging content can significantly enhance user experience & site traffic.

404 errors can occur when pages are removed or moved without a redirect. These issues can lead to duplicate content indirectly. To mitigate this, always have a strategy to handle 404s.

Custom 404 pages can guide users towards relevant content. Provide links to popular posts or categories on your site. This encourages users to stay engaged, even when a specific page is missing.

Regularly check for & update any broken links. This can improve user experience while significantly reducing the chance of duplicates.

Duplicate content refers to blocks of content that appear on the internet in more than one location. This can be on different domains or on the same domain. Google may have problems figuring out which version to include in search results. It can hurt your search engine rankings if not managed well. Duplicate content can occur for various reasons. Copying content from one site to another is one cause. URL parameters can also lead to copies of similar content appearing on multiple pages.

This issue can reduce your site's SEO effectiveness. Search engines may struggle to decide which page to rank in search results. This can lead to traffic loss. It can also dilute visibility among your pages. If your site has multiple similar pages, traffic may split among them. Each page will get a smaller share.

Duplicate content can lead to lower rankings. Lower rankings mean fewer visitors. Sites may lose credibility & authority. It becomes harder to drive conversions. Therefore, managing duplicate content is critical.

There are various ways to manage duplicate content. One method is through technical SEO solutions. Using specific techniques helps search engines understand your site better. Each chosen method can help inform search engines of the preferred content version.

By optimizing your URL structure, you can reduce duplicate content issues. Clean URLs are easier for search engines to crawl. Make sure each URL is distinct & descriptive. Avoid using vague or generic terms.

301 redirects guide users & search engines to the correct page. If you move content, set up a 301 redirect from the old URL to the new one. This process retains the SEO value of the original page. Always check your redirects regularly to ensure they are functioning.
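
The decision of where a 301 should point can be written down as a small rule before it goes into server configuration. The sketch below assumes HTTPS & the www host are the preferred form; `PREFERRED_HOST`, `BARE_HOST` & the function name are hypothetical choices for illustration.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit

PREFERRED_HOST = "www.example.com"  # assumption: the www host is the preferred one
BARE_HOST = "example.com"


def redirect_target(url: str) -> Optional[str]:
    """Return the URL to 301 to, or None when the URL is already in preferred form."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host == BARE_HOST:
        host = PREFERRED_HOST
    path = parts.path or "/"
    # Always prefer HTTPS and drop any fragment, which servers never see anyway.
    preferred = urlunsplit(("https", host, path, parts.query, ""))
    return None if preferred == url else preferred


print(redirect_target("http://example.com/shoes"))
print(redirect_target("https://www.example.com/shoes"))
```

A rule like this can also be run over a crawl export to list every URL that should be answering with a redirect but is not.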

If your pages currently have no user-selected canonical, you can add canonical tags to declare one. A canonical tag signals which version of a page is preferred. This helps search engines index the main version. Google treats the tag as a strong hint rather than a directive, & its benefit is that the right version gets chosen, not that a penalty is avoided.

Review your site's technical SEO settings to reduce duplication. Ensure that your settings are properly configured. This includes your robots.txt file & sitemap. Optimize your settings to ensure search engines find what they need easily.
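
For the sitemap, a common approach is to list only the preferred version of each page, since sitemap inclusion is one of the hints search engines can use. The sketch below builds a minimal sitemap with Python's standard library; the function name & URLs are hypothetical, & a real sitemap may carry extra fields such as last-modified dates.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(preferred_urls: list[str]) -> str:
    """Return sitemap XML listing each preferred URL exactly once."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in sorted(set(preferred_urls)):
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical list that accidentally contains the same URL twice.
sitemap_xml = build_sitemap([
    "https://www.example.com/shoes",
    "https://www.example.com/",
    "https://www.example.com/shoes",
])
print(sitemap_xml)
```

Feeding the function already-normalized URLs keeps parameter variants & non-preferred hosts out of the sitemap.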

Creating unique content is vital. Each piece should serve its purpose. Focus on different angles or details in your writing. This will help reduce duplication naturally. Aim for originality in every post or page.

Syndication may cause duplication. Be selective with what you choose to syndicate. Ask for a rel="canonical" tag in the syndicated copy that points back to your original. This shows your original site retains authority over the content. This helps search engines know which version carries the main SEO weight.

Regular audits can help you identify duplicate content. Use tools like Google Search Console to find duplicated issues. Track your progress over time. By checking frequently, you can stay ahead of potential problems.

Duplicate content can confuse users. A confused user may leave your site for good. Provide clear pathways for navigation. Ensure users can easily find relevant content. Aim to create a seamless experience for every visitor.

You can use various tools to find duplicate content. Google Search Console is a good starting point: it helps identify content issues & lets you monitor your website's performance regularly. This is critical for keeping duplicate content at bay.

Analyze case studies to understand duplicate content impact. Study how different sites have managed their issues. This can provide valuable lessons. Look at successes & failures in the industry.

| Website | Outcome |
|---|---|
| Website A | Improved rankings after fixing duplication |
| Website B | Traffic declined due to lack of management |

"Utilizing a strategy for Handling Duplicate Content Without User-Selected Canonicals in Google Search is essential for online success." – Verlie Bins

As SEO evolves, strategies will change. Stay informed about best practices. Implement updated techniques regularly. The future may bring new tools & methods to manage duplication. Being proactive can lead to better site performance.

Follow industry blogs for the latest news. Join forums for discussions on SEO practices. Consistently educate yourself to stay ahead.

I remember my challenges with duplicate content. My website faced penalties for this issue. I learned valuable lessons along the way. Implementing solutions helped improve my site's performance. Today, I am more aware of management techniques.

Remember that eliminating duplicate content is essential. Regular monitoring will make a difference. Keep your content unique & useful. This will ensure better user engagement & SEO results.

Duplicate content refers to blocks of text or data that appear more than once across different URLs. This can create confusion for search engines regarding which version to index & display in search results.

Managing duplicate content is crucial because it can dilute page authority & negatively impact search rankings. It can also confuse users who might encounter similar content across multiple pages.

Common causes include URL variations due to tracking parameters, printer-friendly versions of pages, & website content syndication. Some content management systems may also generate duplicate pages inadvertently.

Tools like Google Search Console, Copyscape, or Screaming Frog can help identify duplicate content. They can crawl your site & highlight pages with similar or identical text.
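
How "similar" two pages are can be estimated with a simple overlap measure. The sketch below compares word shingles with Jaccard similarity, a common general technique; it is not a description of how any of the tools above work internally, & the shingle size of three words is an arbitrary assumption.

```python
def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Split text into overlapping runs of `size` consecutive words."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: str, b: str, size: int = 3) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    set_a, set_b = shingles(a, size), shingles(b, size)
    if not set_a and not set_b:
        return 1.0
    return len(set_a & set_b) / len(set_a | set_b)


score = jaccard("the quick brown fox jumps", "the quick brown fox sleeps")
print(score)
```

Pages scoring close to 1.0 against each other are candidates for consolidation; the threshold worth acting on is a judgment call for each site.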

Canonical tags are HTML elements that help indicate the preferred version of a webpage when there are multiple versions. They inform search engines which page to prioritize in search results, reducing the impact of duplicate content.

If user-selected canonicals are not an option, you can use other methods such as 301 redirects to point to the preferred version of a page, or modify your site's architecture to minimize duplicate pages.

Ignoring duplicate content can lead to poorer search engine rankings, reduced organic traffic, & a confusing experience for users visiting your site. It can also impact the overall credibility of your site.

To prevent future duplicate content issues, it’s essential to implement a solid content strategy, use unique titles & descriptions, & ensure proper URL structures. Regular content audits can also help maintain site integrity.

Google may choose one version of duplicate content to index, potentially excluding others from search results. This can lead to reduced visibility for the non-preferred copies, impacting website performance.

301 redirects permanently forward users & search engines from one URL to another. Using them helps consolidate page authority & focus traffic on the preferred version of a webpage.

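Using noindex tags can instruct search engines not to index certain pages. This can be effective for preventing duplicate content from appearing in search results while keeping the pages available for users. Note that noindex removes a page from search entirely rather than consolidating its signals into the preferred version, so a redirect or canonical tag is usually the better fit for duplicates within one site.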
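
During an audit it is useful to confirm which pages actually carry a noindex directive. The sketch below checks the robots meta tag in a page's HTML using Python's standard library. The names are hypothetical, & it does not cover the `X-Robots-Tag` HTTP header or crawler-specific meta tags, which would need separate checks.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        if (attr_map.get("name") or "").lower() != "robots":
            return
        content = attr_map.get("content") or ""
        # Directives are comma-separated, e.g. "noindex, follow".
        for part in content.split(","):
            if part.strip():
                self.directives.add(part.strip().lower())


def is_noindexed(html: str) -> bool:
    finder = RobotsMetaFinder()
    finder.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in finder.directives or "none" in finder.directives


print(is_noindexed('<head><meta name="robots" content="noindex, follow"></head>'))
print(is_noindexed('<head><meta name="robots" content="index, follow"></head>'))
```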
