Monitoring Google Search Console Crawl Reports is essential for sharper SEO insights. These reports show how Googlebot interacts with your website, highlighting issues like crawl errors, redirect loops, & indexability concerns. By analyzing crawl stats & patterns, you can see which pages Google prioritizes & optimize your site structure accordingly. Reviewing these reports regularly helps you fix technical issues, improve page speed, & ensure all valuable content is indexed, which supports your site’s visibility & search engine rankings.


Google Search Console Crawl Reports are essential tools for website owners. They show how search engines interact with your site, & monitoring them helps you spot crawling issues that may prevent your pages from being indexed. Search engines like Google use bots (crawlers) to fetch web pages so they can index content accurately & return relevant results for user queries.

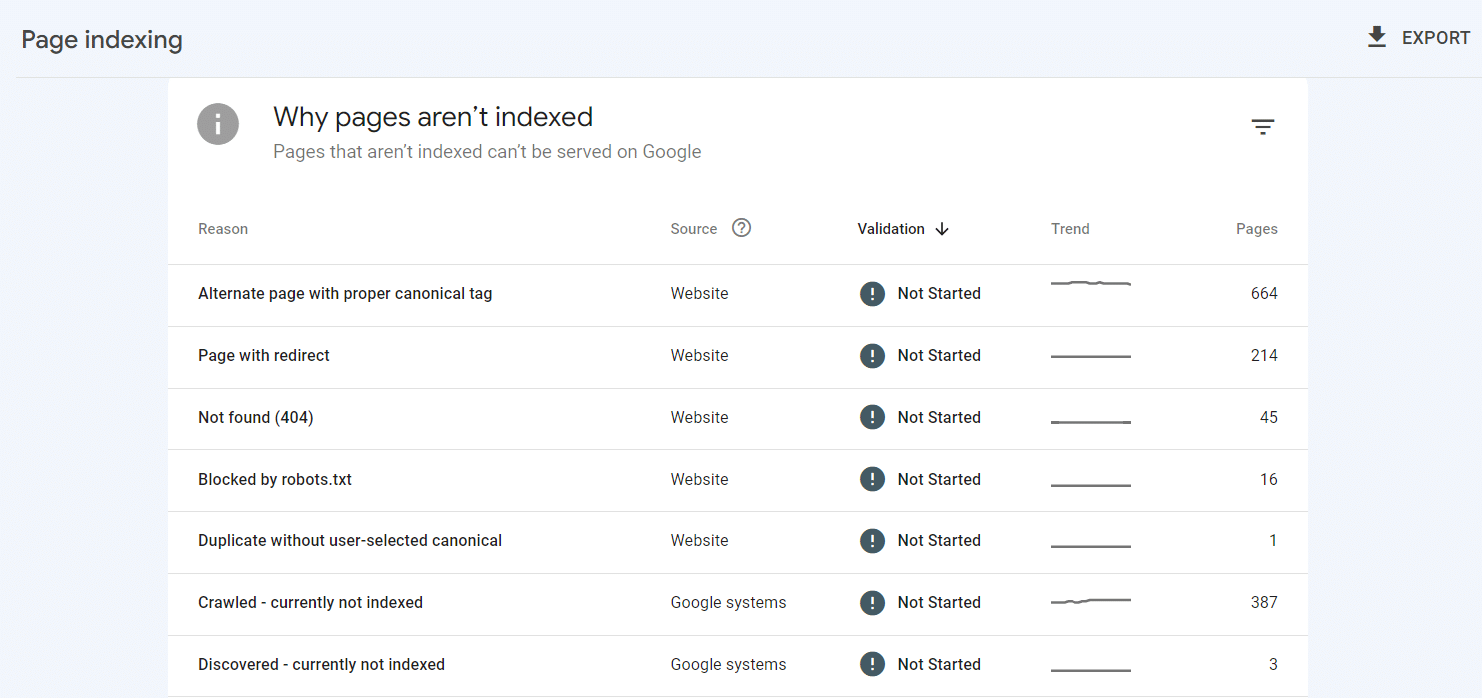

Crawl Reports highlight technical problems, including errors that search bots encounter. For instance, a 404 error means the requested page does not exist, while a server (5xx) error may indicate that your site was temporarily unreachable. These insights let you address problems proactively. The reports also include metrics such as the number of crawl requests & the number of pages crawled over a given timeframe, which help you assess whether search engines are accessing your content efficiently.
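To make the difference between these error types concrete, here is a minimal Python sketch that buckets HTTP status codes the way the paragraph above describes: 404 (& the related 410) as missing pages & 5xx codes as server errors. The function name & category labels are hypothetical & are not Search Console's own labels.

```python
def classify_crawl_status(status: int) -> str:
    """Map an HTTP status code to a rough crawl-error category.

    The category labels are illustrative; Search Console uses its own wording.
    """
    if 200 <= status < 300:
        return "ok"
    if 300 <= status < 400:
        return "redirect"
    if status in (404, 410):
        return "not found"  # the page does not exist (410 = gone permanently)
    if 400 <= status < 500:
        return "client error"  # e.g. 401/403 access problems
    if 500 <= status < 600:
        return "server error"  # the site failed to respond properly
    return "unknown"


if __name__ == "__main__":
    for code in (200, 301, 404, 503):
        print(code, classify_crawl_status(code))
```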

Keeping tabs on these reports aids in enhancing your overall site performance. Adjustments can be made based on the feedback provided by the reports. Therefore, monitoring Crawl Reports is not merely an analytical activity; it profoundly impacts your SEO strategy & website visibility on search engine results pages (SERPs).

The origins of Google Search Console date back to 2005, when Google launched the service that soon became Google Webmaster Tools. Initially, it offered basic functions such as monitoring site performance & understanding errors. In 2015, Google rebranded the tool as Google Search Console, & its capabilities have expanded significantly since then.

As more users began leveraging the tool, features expanded to include Crawl Reports. These reports provided insights into the technical health of websites, spotlighting crucial details like crawl errors, submission errors, & site performance. By implementing user feedback, Google continuously improved functionality, making it user-friendly & informative.

Today, Crawl Reports have become an integral component for any SEO strategy. Their historical progression reflects the growing need for in-depth SEO understanding. Website owners can now rely on advanced metrics & diagnostic tools, further bridging gaps that once existed in website optimization. This evolution showcases how digital marketing adapts to changing technologies.

Adopting Google Search Console Crawl Reports starts with verifying your website in the tool. Once verification is complete, explore the crawl data (the Crawl stats report is found under Settings) to identify key metrics. Focus on crawl errors first, addressing 404 & server errors to ensure a smoother user experience, & prioritize critical issues that affect your site's accessibility.

Next, use the Page indexing report (formerly called the Coverage report). It shows which pages are indexed & the reasons other pages are not. Working with this report is a two-fold task: fix the errors it lists while optimizing the content on indexed pages to improve their ranking potential.

Schedule regular audits of your website’s crawl activity; reminders help you monitor changes consistently & adjust your strategy. In addition, the URL Inspection tool lets you check individual URLs: it shows a URL's current index status & can run a live test of the page to pinpoint specific issues. Combining these methods gives you a comprehensive way to use Google Search Console effectively.

Regular monitoring of Google Search Console Crawl Reports yields numerous benefits for your SEO strategy. First, it helps you identify technical issues quickly. By catching errors early, you can prevent declines in rankings or traffic. Consistent monitoring lets you take a proactive approach rather than a reactive one.

On top of that, these reports provide insights into how search engines view your content. Understanding this can lead to optimization opportunities, allowing you to fine-tune your website & content for better visibility. By analyzing crawl frequency, for instance, you gain insight into your site's activity, helping you schedule updates & new content more strategically.

In addition, detailed insights assist in resource allocation. You can prioritize which pages require immediate attention or optimization, saving time & focusing efforts where needed most. The streamlined process of addressing crawl-related issues improves overall site health, fostering a robust user experience.

While utilizing Google Search Console Crawl Reports can be beneficial, users may encounter challenges. One common issue involves misunderstanding crawl errors, which can lead to improper attempts at resolution. To combat this, familiarize yourself with the different types of errors. Resources from Google can clarify definitions & provide remediation methods.

Another hurdle lies in managing a large number of URLs. Website owners sometimes struggle to identify where issues originate, especially with vast sites. Segmenting your audits by focusing on specific sections or content types can simplify this process. Set clear priorities for which pages to monitor closely.
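Segmenting an audit can be as simple as grouping error URLs by their top-level path. A minimal Python sketch, assuming you have exported the affected URLs (for example from a Search Console report) into a list; the URLs below are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

# Hypothetical error URLs exported from a crawl report.
SAMPLE_ERROR_URLS = [
    "https://www.example.com/blog/old-post",
    "https://www.example.com/blog/another-post",
    "https://www.example.com/shop/item-42",
    "https://www.example.com/",
]


def errors_by_section(error_urls: list[str]) -> Counter:
    """Count error URLs per top-level path segment ('/blog/x' -> 'blog')."""
    counts: Counter = Counter()
    for url in error_urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        section = segments[0] if segments else "(root)"
        counts[section] += 1
    return counts


if __name__ == "__main__":
    # Largest sections first, so you know where to start the audit.
    for section, count in errors_by_section(SAMPLE_ERROR_URLS).most_common():
        print(f"{section}: {count}")
```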

Server connectivity issues can also disrupt crawling. Ensure that your server operates reliably & can handle increased traffic. Analyzing server logs may reveal performance hiccups, allowing you to address them efficiently. Resolving these problems quickly helps your site maintain optimal search engine performance.
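As a starting point for log analysis, the Python sketch below scans access-log lines in the common "combined" format & keeps Googlebot requests that received a 5xx response. The log format & sample lines are assumptions; adjust the regular expression to your server's format. Anyone can claim to be Googlebot in a user-agent string, so verify important findings (Google documents a reverse DNS check for this).

```python
import re

# Assumes the Apache/Nginx "combined" log format.
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Hypothetical sample lines for illustration.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2023:13:55:36 +0000] "GET /blog/post HTTP/1.1" 503 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2023:13:56:01 +0000] "GET /about HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Oct/2023:13:57:12 +0000] "GET /blog/post HTTP/1.1" 500 512 "-" "Mozilla/5.0"',
]


def googlebot_server_errors(lines):
    """Return (time, path, status) for Googlebot requests that got a 5xx response."""
    hits = []
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue  # skip lines that don't fit the expected format
        status = int(match.group("status"))
        if "Googlebot" in match.group("agent") and 500 <= status < 600:
            hits.append((match.group("time"), match.group("path"), status))
    return hits


if __name__ == "__main__":
    for hit in googlebot_server_errors(SAMPLE_LOG):
        print(hit)
```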

Looking forward, the landscape surrounding Google Search Console Crawl Reports will likely evolve. With advancements in artificial intelligence & machine learning, automated features may become more prominent. These enhancements could streamline error detection, allowing users to receive real-time alerts for critical issues.

As user experience continues to shape SEO strategies, expectations for crawl reports will likely expand. Enhanced metrics focusing on user engagement may emerge, & by incorporating details about how users interact with sites, Google could extend its reports to provide even richer insights.

Ultimately, staying abreast of updates & trends in Google Search Console remains crucial for website owners. Embracing new functionalities will empower users to refine their SEO efforts. Monitoring Crawl Reports will consistently play a vital role in shaping future strategies.

| Metric | Description |
|---|---|
| Pages Crawled | Total number of pages crawled in a specific timeframe. |
| Crawl Errors | Pages that crawlers could not access. |
| Average Response Time | Average time your server took to respond to a crawl request. |
| Last Crawl Date | When Google last accessed a page. |
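If you keep your own crawl records (for example, Googlebot requests parsed from server logs), the metrics in the table above can be approximated with a few lines of Python. This is a minimal sketch: the `CrawlRecord` structure is hypothetical, "crawl errors" here simply counts requests with a 4xx or 5xx status, & Search Console's own figures may be calculated differently.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class CrawlRecord:
    """One crawler request (hypothetical structure)."""
    url: str
    status: int
    response_ms: float
    crawled_at: datetime


def summarize(records: list[CrawlRecord]) -> dict:
    """Approximate the table's metrics from raw crawl records."""
    last_crawl: dict[str, datetime] = {}
    for r in records:
        if r.url not in last_crawl or r.crawled_at > last_crawl[r.url]:
            last_crawl[r.url] = r.crawled_at
    avg: Optional[float] = (
        sum(r.response_ms for r in records) / len(records) if records else None
    )
    return {
        "pages_crawled": len(last_crawl),  # distinct URLs
        "crawl_errors": sum(1 for r in records if r.status >= 400),
        "avg_response_ms": avg,
        "last_crawl": last_crawl,  # URL -> most recent crawl time
    }


SAMPLE = [
    CrawlRecord("/", 200, 120.0, datetime(2024, 5, 1, 8, 0)),
    CrawlRecord("/blog/post", 404, 80.0, datetime(2024, 5, 1, 9, 0)),
    CrawlRecord("/", 200, 100.0, datetime(2024, 5, 2, 8, 0)),
]

if __name__ == "__main__":
    print(summarize(SAMPLE))
```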

| Step | Action |
|---|---|
| Identify Error Type | Determine the type & cause of the error. |
| Create a Fix Plan | Outline what needs to be changed or fixed. |
| Implement Changes | Make necessary changes to resolve errors. |
| Test Changes | Check if the changes resolved the errors. |
| Monitor Progress | Regularly check for any recurrence of errors. |
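The "Test Changes" & "Monitor Progress" steps boil down to comparing the set of failing URLs before & after a fix. A minimal Python sketch, assuming you export the error URLs from two reports taken at different times; the URLs are hypothetical.

```python
def compare_error_sets(before: set[str], after: set[str]) -> dict[str, set[str]]:
    """Split URLs into resolved, still failing, and newly failing."""
    return {
        "resolved": before - after,       # fixed since the last check
        "still_failing": before & after,  # the fix has not worked yet
        "new": after - before,            # regressions to investigate
    }


BEFORE = {"/old-page", "/broken-link", "/server-timeout"}
AFTER = {"/server-timeout", "/new-404"}

if __name__ == "__main__":
    for label, urls in compare_error_sets(BEFORE, AFTER).items():
        print(label, sorted(urls))
```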

“To succeed, one must be proactive & vigilant. Monitoring your crawl reports is key.” – Dr. Maverick Daniel

The Google Search Console Crawl Report is a vital tool for webmasters. It shows how Google reads a website, including how many pages Google crawls & which crawl errors it encounters. This data is essential for SEO because it helps identify issues that may affect your site's ranking.

The Crawl Report includes various elements. It showcases URL errors, server errors, & pages that could not be indexed. Addressing these errors is crucial for SEO success. If these issues persist, they can lead to lost traffic. Analyzing this data can improve the performance of your site.

The Crawl Report also lets you monitor the overall health of your website & quickly identify problems that affect user experience. The faster you fix errors, the better your website performs, & consistent monitoring leads to better SEO insights.

Crawl Reports offer beneficial insights for SEO strategies. Without this data, webmasters cannot understand how search engines view their sites. This leads to missed opportunities. Websites may struggle to rank well without adequate monitoring. Crawl Reports help bridge this gap.

Good SEO practices require regular checks. If your site has many crawl errors, Google may not index your pages well, which makes your content harder for users to find. Pages that Google can crawl reliably are more likely to be indexed & shown in search results, so monitoring these reports regularly is crucial.

On top of that, Crawl Reports can indicate how Google interprets your site. Knowing these insights helps make smart changes. These changes can optimize the site better for users. The ultimate aim is increased traffic. Successful SEO comes from understanding & utilizing these reports effectively.

Each component serves a particular purpose. Error URLs highlight pages that are not accessible. Server errors indicate issues with the hosting environment. Indexed pages show how many pages are available for search results. Crawl requests per day reveal how often Google fetches pages from your site. The crawl rate limit is the maximum rate at which Googlebot will fetch from your site without overloading your server. Understanding these components guides your optimization strategy.

Accessing crawl data in Google Search Console is straightforward. Sign in with your Google account & select your property. Click “Settings” in the sidebar, then, under “Crawling,” open the Crawl stats report. Each section of the report contains insights worth monitoring.

Within the report, you can break crawl requests down by response, file type, purpose, & Googlebot type to focus on specific issues. Regular checks help you stay ahead in SEO, & knowing how often Google crawls your site helps shape your content strategy. Review the data weekly or monthly for best results.

Interpreting the Crawl Report requires attention. First, focus on error URLs; these need immediate correction. Then examine server errors & fix them so your site stays reachable. It is also essential to check the number of indexed pages: sharp fluctuations could indicate an indexing problem. For a closer look at a specific page, use Google’s URL Inspection tool, which shows whether that URL is indexed correctly.
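If you want the same per-URL information programmatically, the Search Console API exposes a URL Inspection endpoint. The sketch below does not call the API; it only reads a response you have already fetched (for example with Google's API client library). The sample response is hand-written for illustration, & the field names (`inspectionResult`, `indexStatusResult`, `verdict`, `coverageState`, `robotsTxtState`, `lastCrawlTime`) reflect our reading of the v1 API reference, so check them against the current documentation before relying on this.

```python
# Hand-written example shaped like a URL Inspection API (v1) response;
# the values are illustrative, not real output.
SAMPLE_RESPONSE = {
    "inspectionResult": {
        "indexStatusResult": {
            "verdict": "PASS",
            "coverageState": "Submitted and indexed",
            "robotsTxtState": "ALLOWED",
            "lastCrawlTime": "2024-05-01T08:00:00Z",
        }
    }
}


def summarize_inspection(response: dict) -> dict:
    """Extract the key index-status fields, tolerating missing keys."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    return {
        "verdict": status.get("verdict"),
        "coverage": status.get("coverageState"),
        "robots_txt": status.get("robotsTxtState"),
        "last_crawl": status.get("lastCrawlTime"),
    }


if __name__ == "__main__":
    print(summarize_inspection(SAMPLE_RESPONSE))
```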

Next, observe crawl statistics over time. Look for patterns that signal improvements or declines. Are there fewer errors this month? Is your crawl rate improving? These questions give deeper insights. Interpreting the data carefully will lead to actionable insights. Over time, this results in better SEO strategies.
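To answer questions like "Are there fewer errors this month?" consistently, it helps to compute the change between periods. A minimal Python sketch, assuming you record one error count per month yourself; the counts below are hypothetical.

```python
from typing import Optional


def month_over_month(errors_per_month: dict[str, int]) -> list[tuple[str, int, Optional[float]]]:
    """Return (month, count, % change vs. previous month) rows in date order."""
    rows = []
    previous: Optional[int] = None
    for month in sorted(errors_per_month):  # "YYYY-MM" keys sort chronologically
        count = errors_per_month[month]
        if previous is None or previous == 0:
            change = None  # no usable baseline to compare against
        else:
            change = (count - previous) * 100 / previous
        rows.append((month, count, change))
        previous = count
    return rows


SAMPLE_COUNTS = {"2024-03": 40, "2024-01": 50, "2024-02": 40}

if __name__ == "__main__":
    for month, count, change in month_over_month(SAMPLE_COUNTS):
        label = "n/a" if change is None else f"{change:+.1f}%"
        print(month, count, label)
```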

| Error Type | Description |
|---|---|
| 404 Error | The requested page was not found. |
| 500 Error | An internal server error prevented the page from loading. |
| Blocked by robots.txt | robots.txt rules prevent Googlebot from crawling the page. |

Addressing these errors is crucial. For 404 errors, restore the page or redirect users (typically with a 301 redirect) to the most relevant live page. Fix server errors by checking your hosting setup. Ensure that robots.txt allows search engines to crawl important pages. Fixing these errors improves user experience while boosting SEO.
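A quick way to check that robots.txt is not blocking important pages is Python's standard-library `urllib.robotparser`. This is a minimal sketch with a hypothetical robots.txt & URL list. The standard-library parser does not implement every matching rule Google applies (for example, `*` wildcards inside paths), so treat the result as a rough check & confirm anything surprising in Search Console.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
"""

# Hypothetical pages to check.
IMPORTANT_URLS = [
    "https://www.example.com/",
    "https://www.example.com/blog/crawl-reports",
    "https://www.example.com/cart/checkout",
]


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the robots.txt rules disallow for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, IMPORTANT_URLS):
        print("Blocked:", url)
```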

Utilizing Crawl Report insights effectively can amplify your SEO strategy. First, prioritize fixing high-impact errors. This means addressing errors that prevent users from accessing key pages. Second, create a routine check-up schedule. Regular monitoring keeps issues at bay.
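One way to turn "fix high-impact errors first" into a repeatable rule is to sort issues by severity, then by how much traffic the affected page gets. A minimal Python sketch; the severity weights & traffic figures are hypothetical, & real traffic numbers would come from your analytics tool.

```python
from dataclasses import dataclass

# Hypothetical severity weights: higher means fix sooner.
SEVERITY = {"server error": 3, "not found": 2, "redirect": 1}


@dataclass
class CrawlIssue:
    url: str
    kind: str            # e.g. "server error" or "not found"
    monthly_visits: int  # from your analytics tool


def prioritize(issues: list[CrawlIssue]) -> list[CrawlIssue]:
    """Sort by severity first, then by traffic, both descending."""
    return sorted(
        issues,
        key=lambda issue: (SEVERITY.get(issue.kind, 0), issue.monthly_visits),
        reverse=True,
    )


SAMPLE_ISSUES = [
    CrawlIssue("/blog/old-guide", "not found", 1200),
    CrawlIssue("/pricing", "server error", 300),
    CrawlIssue("/tag/misc", "not found", 5),
]

if __name__ == "__main__":
    for issue in prioritize(SAMPLE_ISSUES):
        print(issue.kind, issue.url, issue.monthly_visits)
```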

You can also use crawl data to refine content. Update old content based on crawling patterns, & remove or replace low-performing content to keep the website fresh & engaging. Use the data to improve your internal linking as well; good internal links help search engines discover your pages & understand how they relate to each other.
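A practical internal-linking check is to find "orphan" pages that no other page links to, since crawlers have a harder time discovering them. A minimal Python sketch, assuming you already have a map of internal links per page (for example exported from a site crawler); the link map below is hypothetical.

```python
# Hypothetical internal link map: page -> pages it links to.
LINKS = {
    "/": ["/blog", "/about"],
    "/blog": ["/blog/post-1", "/"],
    "/blog/post-1": ["/blog"],
    "/about": [],
    "/old-landing": ["/"],
}


def inbound_link_counts(links: dict[str, list[str]]) -> dict[str, int]:
    """Count how many distinct other pages link to each page."""
    counts = {page: 0 for page in links}
    for source, targets in links.items():
        for target in set(targets):
            if target != source:  # ignore self-links
                counts[target] = counts.get(target, 0) + 1
    return counts


def orphan_pages(links: dict[str, list[str]], home: str = "/") -> list[str]:
    """Pages with no inbound internal links (the home page is exempt)."""
    counts = inbound_link_counts(links)
    return sorted(page for page, n in counts.items() if n == 0 and page != home)


if __name__ == "__main__":
    print(orphan_pages(LINKS))
```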

Lastly, benchmarking against competitors can provide a competitive edge. Compare your crawl data with similar websites. It can reveal missed opportunities. This practice leads to better strategies for improvement. Ultimately, best practices transform crawl report insights into actionable tasks.

Crawl Reports serve as a health check for websites. They signal critical areas needing repair, & each unresolved issue can grow into a bigger problem. Regular analysis helps maintain your site, so start by focusing on error details.

Understanding these factors helps you prioritize fixes. Fixing errors improves user experience. Improved user experience often leads to better rankings & more traffic. Hence, regular health checks through Crawl Reports are essential for ongoing SEO success.

Several tools can complement your analysis of Crawl Reports. They help you diagnose issues efficiently, saving time & improving productivity. Popular options include Google Analytics, Screaming Frog, & Ahrefs.

Each tool offers unique features. Google Analytics provides traffic insights. Screaming Frog helps crawl your website for issues. Ahrefs can track backlinks that may affect crawling. Integrating these tools enhances your understanding of site performance. Utilizing this data forms a holistic SEO strategy.

I have often used Google Search Console Crawl Reports for my website. Initially, I faced many crawl errors. I did not know how much it affected my SEO. After monitoring the reports regularly, I found patterns that led to quick fixes. The results were evident in my website's performance. Traffic increased, & my site's visibility improved significantly. Now, I make it a point to check these insights regularly. They guide my content strategy & help maintain user experience.

“You cannot improve what you do not measure. Monitor Google Search Console Crawl Reports for Better SEO Insights.” – Mrs. Christa Towne

Consistent monitoring of your Crawl Reports leads to sustained SEO success. Set automated alerts for crawl errors. Ensure you receive notifications for any red flags. This proactive approach allows immediate action. The longer you wait, the more damage it can cause to rankings.
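If you track daily crawl-error counts yourself (for example from server logs), a simple threshold check can flag a sudden spike so you can act right away. A minimal Python sketch; the `factor` & `minimum` thresholds are hypothetical, & actually sending the alert (email, chat) is left out.

```python
from statistics import mean


def should_alert(history: list[int], today: int, factor: float = 2.0, minimum: int = 10) -> bool:
    """Flag today's crawl-error count if it jumps well above the recent average.

    `factor` and `minimum` are hypothetical thresholds; tune them for your site.
    """
    if today < minimum:
        return False  # ignore small absolute numbers
    if not history:
        return True  # no baseline yet, so any count above `minimum` is worth a look
    return today > factor * mean(history)


if __name__ == "__main__":
    last_week = [3, 4, 5, 4, 3, 5, 4]  # hypothetical daily error counts
    print(should_alert(last_week, 12))
```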

Develop a strategy for ongoing evaluation. Create quarterly reports analyzing crawl data. This helps identify long-term trends. Documenting improvements & failures guides future strategies. Regular audits keep your website in top shape. Continuous improvements bring lasting success.

A crawl report in Google Search Console provides insights into how Google's bots access & index your website. It shows which pages have been crawled, any issues encountered, & the overall health of the crawl process.

Crawl reports are essential for SEO as they help identify issues that may prevent search engines from properly indexing your site. By addressing these issues, you can improve your site's visibility & ranking in search results.

You can access crawl data by logging into your Google Search Console account & selecting your property. Indexing status & errors appear in the "Pages" report under "Indexing" (formerly the "Coverage" section under "Index"), & crawl activity appears in the Crawl stats report under "Settings."

Crawl reports often highlight issues such as 404 errors, server errors, issues with redirects, & pages that are blocked by robots.txt. These issues can impact how well your site is indexed.
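Redirect issues usually take the form of chains (several hops before the final page) or loops (URLs that redirect back to one another). A minimal Python sketch, assuming your redirect rules are kept as a simple source-to-target map; the map is hypothetical & the code makes no HTTP requests, so it checks your rules rather than live server behavior.

```python
# Hypothetical redirect rules: source URL -> target URL.
REDIRECTS = {
    "/old": "/newer",
    "/newer": "/newest",
    "/a": "/b",
    "/b": "/a",
}


def follow_redirects(redirects: dict[str, str], start: str) -> tuple[list[str], bool]:
    """Follow the map from `start`; return the URLs visited and whether a loop occurred."""
    path = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        path.append(current)
        if current in seen:
            return path, True  # redirect loop
        seen.add(current)
    return path, False


if __name__ == "__main__":
    for source in ("/old", "/a"):
        hops, is_loop = follow_redirects(REDIRECTS, source)
        print(source, " -> ".join(hops), "LOOP" if is_loop else f"{len(hops) - 1} hop(s)")
```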

To fix crawl errors, you should analyze the type of error & take appropriate action. This could involve correcting broken links, restoring deleted pages, or adjusting your robots.txt file to allow crawling of necessary pages.

The "Crawled - currently not indexed" status means that Google has crawled the page but chose not to index it. Possible reasons include low-quality or duplicate content, or the page being relatively new & not yet deemed significant by Google.

Crawl reports in Google Search Console are updated regularly, but the frequency can vary. Factors such as the website’s activity & the changes made to the site can influence how often Google revisits & updates the crawl data.

If you notice a sudden spike in crawl errors, investigate the changes made to your website. Check for updates to URLs, changes in site structure, or server issues that may have occurred around the same time as the spike.

Crawl issues can affect website performance indirectly. Significant crawling problems can lead to lower indexation, meaning fewer pages appear in search results, which may reduce traffic & visibility.

While page speed is not directly reflected in crawl reports, slow-loading pages may lead to crawl delays or errors. Ensuring your site loads quickly can enhance the crawling process & improve SEO outcomes.

The robots.txt file informs search engines which pages or sections of your site should not be crawled. If misconfigured, it can lead to valuable content being excluded from the crawl reports & ultimately not indexed.

Begin by addressing the most critical errors indicated in the crawl reports, such as server errors or 404 pages. Prioritize fixing issues that impact high-traffic pages or affect your site’s overall indexation.

Other tools that can complement Google Search Console for crawl analysis include Screaming Frog, Ahrefs, & SEMrush. These tools can offer more detailed insights into site structure, links, & overall SEO health.
